Introduction to UQLM

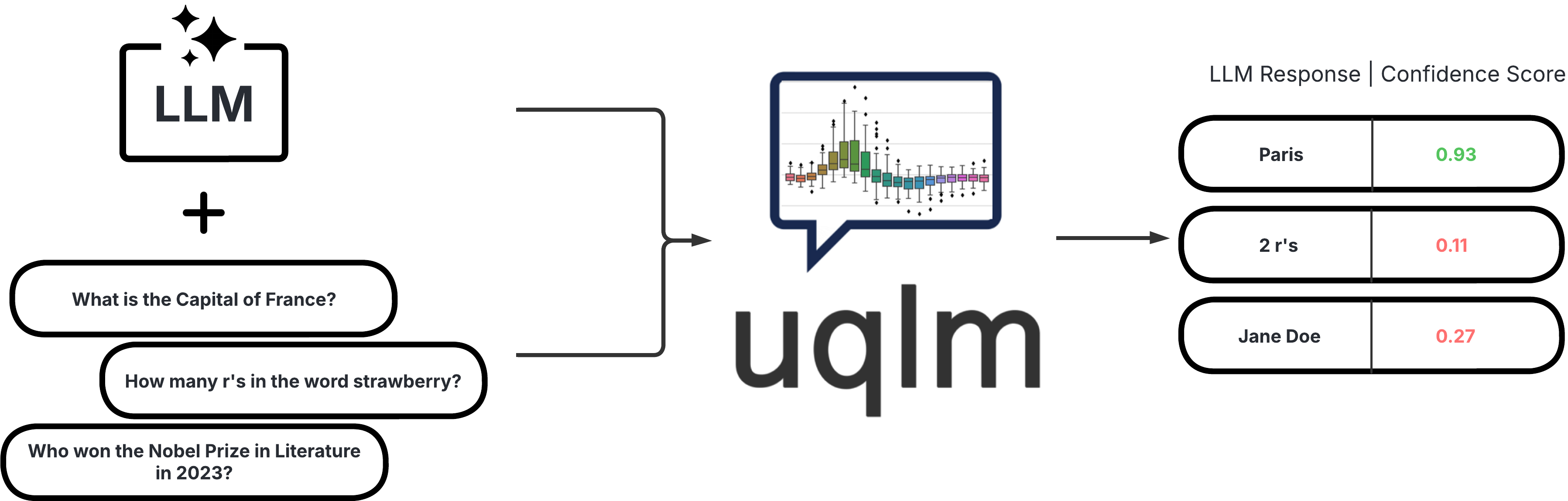

Making Large Language Models (LLMs) reliable is one of the central challenges in applied AI. UQLM (Uncertainty Quantification for Language Models) is a Python library that detects hallucinations in LLM outputs using uncertainty quantification techniques. This post walks through UQLM's features, installation, usage, and community, giving developers a practical overview.

What is UQLM?

UQLM is a Python library that provides a suite of response-level scorers for quantifying the uncertainty of LLM outputs. Each scorer returns a confidence score between 0 and 1, where higher scores indicate a lower likelihood of errors or hallucinations. The library groups its scoring methods into four types:

- Black-Box Scorers: Consistency-based methods that compare multiple responses to the same prompt. They need no access to internal model states and work with any LLM, but the extra generations add latency and cost.

- White-Box Scorers: Token-probability-based methods that are faster and cheaper than black-box methods, but require access to the model's token probabilities, which not every LLM API exposes.

- LLM-as-a-Judge Scorers: Customizable methods that use one or more LLMs to judge the correctness of a response.

- Ensemble Scorers: Methods that combine multiple scorers into a single, more robust uncertainty estimate.
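
Because every scorer returns a confidence score in [0, 1], a common downstream step is to flag low-confidence responses for human review or a fallback. The sketch below is plain Python and does not call UQLM; the `flag_low_confidence` helper and its 0.5 default threshold are hypothetical choices for illustration, and a real threshold should be chosen on data where correctness is known.

```python
from typing import List, Tuple


def flag_low_confidence(
    responses: List[str], confidences: List[float], threshold: float = 0.5
) -> List[Tuple[str, float, bool]]:
    """Pair each response with its confidence and a review flag.

    The flag is True when the confidence falls below the (hypothetical)
    threshold, i.e. when a hallucination is considered more likely.
    """
    if len(responses) != len(confidences):
        raise ValueError("responses and confidences must have the same length")
    rows = []
    for response, score in zip(responses, confidences):
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"confidence {score} is outside [0, 1]")
        # Higher confidence means a lower likelihood of error
        rows.append((response, score, score < threshold))
    return rows


rows = flag_low_confidence(["Canberra", "Sydney"], [0.92, 0.31])
for response, score, needs_review in rows:
    print(f"{response}: confidence={score:.2f}, needs_review={needs_review}")
```

Keeping the threshold as a parameter makes it easy to use a stricter cutoff for high-stakes prompts.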

Key Features of UQLM

- Comprehensive Scoring Suite: Black-box, white-box, LLM-as-a-judge, and ensemble scorers let you trade off cost, latency, and model-access requirements for your use case.

- Flexibility and Customization: Scorers accept LangChain chat models, so you choose which LLMs generate responses and which act as judges, and you can combine scorers into an ensemble.

- Thorough Documentation: API documentation and example notebooks make the library easy to adopt.

- Active Community: UQLM is developed in the open on GitHub and welcomes contributions.

Installation Process

Installing UQLM is straightforward. Install the latest release from PyPI with:

```bash
pip install uqlm
```

Once installed, you can start integrating UQLM into your projects to assess the reliability of your LLM outputs.

Usage Examples

UQLM provides various methods for hallucination detection. Below are examples of how to use different scorer types:

Black-Box Scorers
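
Black-box scorers rest on a simple idea: if a model gives the same answer every time it is asked, that answer is more likely to be correct. The sketch below illustrates two consistency measures in plain Python, without calling UQLM. The helper names are hypothetical. Responses are clustered by normalized exact string match rather than by the NLI-based semantic equivalence that semantic entropy methods use, and normalizing by log(n) is an assumption, so the numbers will not match UQLM's `exact_match` or `semantic_negentropy` outputs.

```python
import math
from collections import Counter
from typing import List


def exact_match_rate(original: str, sampled: List[str]) -> float:
    """Fraction of sampled responses identical to the original response."""
    if not sampled:
        raise ValueError("need at least one sampled response")
    return sum(s == original for s in sampled) / len(sampled)


def negentropy_confidence(responses: List[str]) -> float:
    """1 minus the normalized entropy over clusters of responses.

    Simplification: clusters come from case-insensitive exact matching,
    not from an NLI-based semantic equivalence check.
    """
    n = len(responses)
    if n < 2:
        return 1.0  # a single response carries no disagreement signal
    clusters = Counter(r.strip().lower() for r in responses)
    entropy = -sum((c / n) * math.log(c / n) for c in clusters.values())
    # Assumed normalization: log(n) is the entropy when all n responses differ
    return 1.0 - entropy / math.log(n)


original = "Canberra"
samples = ["Canberra", "canberra", "Sydney", "Canberra", "Canberra"]
print(exact_match_rate(original, samples))  # 0.6
print(round(negentropy_confidence([original] + samples), 3))
```

The exact-match rate treats "canberra" as a disagreement, while the clustering step merges it with "Canberra"; a semantic clustering step generalizes that merging to paraphrases.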

To use the BlackBoxUQ class for hallucination detection, you can follow this example:

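```python
from langchain_google_vertexai import ChatVertexAI
from uqlm import BlackBoxUQ

# Any LangChain chat model can serve as the generator
llm = ChatVertexAI(model='gemini-pro')

# Prompts to answer and score (replace with your own)
prompts = ["What is the capital of Australia?"]

# Sample 5 responses per prompt and score their semantic consistency;
# use_best=True swaps in the uncertainty-minimized response as the final answer
bbuq = BlackBoxUQ(llm=llm, scorers=["semantic_negentropy"], use_best=True)

# Top-level await works in Jupyter; in a script, run this inside asyncio.run(...)
results = await bbuq.generate_and_score(prompts=prompts, num_responses=5)
results.to_df()
```

White-Box Scorers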
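
White-box scorers skip the extra generations and instead read the token probabilities of a single response. A minimal sketch, assuming you already have per-token log-probabilities: the minimum token probability mirrors the idea behind `min_probability`, and the geometric mean of token probabilities is a common length-normalized alternative. The helper names and log-probability values are hypothetical, and this is not UQLM's implementation.

```python
import math
from typing import List


def min_probability(token_logprobs: List[float]) -> float:
    """Probability of the least likely token in the response."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(min(token_logprobs))


def normalized_probability(token_logprobs: List[float]) -> float:
    """Geometric mean of token probabilities (exp of the mean log-probability)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(sum(token_logprobs) / len(token_logprobs))


# Hypothetical log-probabilities for a four-token response
logprobs = [math.log(0.9), math.log(0.8), math.log(0.95), math.log(0.4)]
print(round(min_probability(logprobs), 3))  # 0.4
print(round(normalized_probability(logprobs), 3))
```

The minimum is sensitive to a single doubtful token, which is often where a fabricated fact appears, while the geometric mean smooths over the whole response.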

For token-probability-based scoring, use the WhiteBoxUQ class:

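```python
from langchain_google_vertexai import ChatVertexAI
from uqlm import WhiteBoxUQ

# White-box scoring needs a model that exposes token log-probabilities
llm = ChatVertexAI(model='gemini-pro')

# Prompts to answer and score (replace with your own)
prompts = ["What is the capital of Australia?"]

# min_probability scores each response by its least likely token
wbuq = WhiteBoxUQ(llm=llm, scorers=["min_probability"])

# Top-level await works in Jupyter; in a script, run this inside asyncio.run(...)
results = await wbuq.generate_and_score(prompts=prompts)
results.to_df()
```

LLM-as-a-Judge Scorers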
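
A panel combines several judges' verdicts so that no single judge's mistake dominates. The sketch below summarizes per-judge scores with the average, minimum, maximum, and median. The 0 / 0.5 / 1 verdict scale, the `aggregate_judge_scores` helper, and the example scores are assumptions for illustration, not UQLM's internals.

```python
from statistics import mean, median
from typing import Dict, List


def aggregate_judge_scores(scores: List[float]) -> Dict[str, float]:
    """Combine per-judge scores in [0, 1] into panel-level summaries."""
    if not scores:
        raise ValueError("need at least one judge score")
    return {
        "avg": mean(scores),      # overall agreement
        "min": min(scores),       # most skeptical judge
        "max": max(scores),       # most lenient judge
        "median": median(scores), # robust to a single outlier judge
    }


# Hypothetical verdicts from three judges on one response:
# 1.0 = correct, 0.5 = uncertain, 0.0 = incorrect
panel_scores = [1.0, 0.5, 1.0]
print(aggregate_judge_scores(panel_scores))
```

Using the minimum gives a conservative signal (any doubting judge lowers confidence), while the median tolerates one unreliable judge.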

To evaluate responses with a panel of judges, use the LLMPanel class. Here llm1 generates the responses, and llm1, llm2, and llm3 each judge them:

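```python
from langchain_google_vertexai import ChatVertexAI
from uqlm import LLMPanel

# One generator model and three judge models
llm1 = ChatVertexAI(model='gemini-1.0-pro')
llm2 = ChatVertexAI(model='gemini-1.5-flash-001')
llm3 = ChatVertexAI(model='gemini-1.5-pro-001')

# Prompts to answer and score (replace with your own)
prompts = ["What is the capital of Australia?"]

# llm1 answers each prompt; every judge scores the answers
panel = LLMPanel(llm=llm1, judges=[llm1, llm2, llm3])

# Top-level await works in Jupyter; in a script, run this inside asyncio.run(...)
results = await panel.generate_and_score(prompts=prompts)
results.to_df()
```

Ensemble Scorers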
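
An ensemble turns several component scores into a single confidence value. A minimal sketch, assuming a weighted average with non-negative weights normalized to sum to 1. The `ensemble_confidence` helper, the component scores, the `judge` key, and the weights are hypothetical; in practice, weights could instead be fit on responses whose correctness is known.

```python
from typing import Dict


def ensemble_confidence(
    component_scores: Dict[str, float], weights: Dict[str, float]
) -> float:
    """Weighted average of component confidence scores.

    Weights are normalized to sum to 1, so the result stays in [0, 1]
    whenever every component score does.
    """
    missing = set(component_scores) - set(weights)
    if missing:
        raise ValueError(f"no weight given for: {sorted(missing)}")
    total = sum(weights[name] for name in component_scores)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(
        component_scores[name] * weights[name] / total for name in component_scores
    )


# Hypothetical component scores for one response, combined with equal weights
scores = {"exact_match": 0.6, "noncontradiction": 0.8, "min_probability": 0.4, "judge": 1.0}
equal = {name: 1.0 for name in scores}
print(round(ensemble_confidence(scores, equal), 3))  # 0.7
```

Setting a component's weight to 0 drops it from the average, which is a simple way to compare ensembles with and without a given scorer.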

For a more robust uncertainty estimate, you can use the UQEnsemble class:

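```python
from langchain_google_vertexai import ChatVertexAI
from uqlm import UQEnsemble

llm = ChatVertexAI(model='gemini-pro')

# Prompts to answer and score (replace with your own)
prompts = ["What is the capital of Australia?"]

# Mix black-box ("exact_match", "noncontradiction"), white-box ("min_probability"),
# and LLM-as-a-judge (the llm object itself) components
scorers = ["exact_match", "noncontradiction", "min_probability", llm]
uqe = UQEnsemble(llm=llm, scorers=scorers)

# Top-level await works in Jupyter; in a script, run this inside asyncio.run(...)
results = await uqe.generate_and_score(prompts=prompts)
results.to_df()
```

Community and Contribution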

UQLM welcomes community contributions. If you wish to contribute, you can:

- Report Bugs: Open an issue on GitHub with detailed information.

- Suggest Enhancements: Propose new features or improvements through GitHub issues.

- Submit Pull Requests: Fork the repository, make your changes, and submit a pull request following the contribution guidelines.

For more details, refer to the contributing guidelines.

License and Legal Considerations

UQLM is licensed under the Apache License 2.0, allowing for both personal and commercial use. Ensure compliance with the license terms when using or modifying the library.

Conclusion

UQLM gives developers a practical way to improve the reliability of Large Language Model applications through uncertainty quantification. With scorers spanning black-box, white-box, LLM-as-a-judge, and ensemble methods, a single-command installation, and an open contribution process, it is well worth exploring. Try the library and consider contributing to its growth!

For more information, visit the official documentation or check out the GitHub repository.

Frequently Asked Questions (FAQ)

What is UQLM?

UQLM is a Python library designed for detecting hallucinations in Large Language Models using advanced uncertainty quantification techniques.

How do I install UQLM?

You can install UQLM via pip with the command: pip install uqlm.

What are the main features of UQLM?

UQLM offers various scoring methods for uncertainty quantification, including black-box, white-box, LLM-as-a-judge, and ensemble scorers.

How can I contribute to UQLM?

You can contribute by reporting bugs, suggesting enhancements, or submitting pull requests on GitHub.

What license does UQLM use?

UQLM is licensed under the Apache License 2.0, allowing for personal and commercial use.

Source Code

For more information and to access the source code, visit the UQLM GitHub repository.